Why 80% of AI Projects Fail (And How to Be in the 20%)

The numbers are brutal. According to the RAND Corporation, over 80% of AI projects fail, twice the failure rate of non-AI technology projects. MIT's 2025 research found that despite $30-40 billion in enterprise spending on generative AI, 95% of organizations see no business return. Gartner predicts 30% of GenAI projects will be abandoned after proof of concept by the end of 2025.

These aren't edge cases. This is the norm.

After 15 years in software development and countless AI implementations, I've watched these failures unfold in real time. The pattern is always the same: teams get excited about a new framework, build impressive demos, then watch their projects collapse when they hit production. But here's what the research tells us: most of these failures are preventable. They stem from predictable causes that can be addressed before you write a single line of code.

This article combines the latest research findings with hard-won lessons from building production AI systems. If you're tired of reading about why AI fails and want to know how to actually succeed, keep reading.

The Research: Why AI Projects Really Fail

Before we can fix the problem, we need to understand it. The RAND Corporation interviewed 65 data scientists and engineers and identified five root causes of AI project failure. What struck me was how few of these are actually technical problems.

1. Misunderstanding the Problem

This is the most common reason AI projects fail. Teams start with the technology and work backward to a problem, rather than the other way around. They ask "what can we do with GPT-4?" instead of "what business problem are we trying to solve?"

As RAND puts it: "Misunderstandings and miscommunications about the intent and purpose of the project are the most common reasons for AI project failure."

The MIT research adds another dimension: "GenAI doesn't fail in the lab. It fails in the enterprise, when it collides with vague goals, poor data, and organizational inertia."

2. Lack of Necessary Data

Organizations often lack the data needed to train effective models. But it goes deeper: according to Gartner, 43% of organizations don't even have the right data management practices for AI. Gartner also predicts that through 2026, 60% of AI projects will fail due to insufficient data readiness.

3. Technology-First Thinking

RAND calls this "chasing the latest and greatest advances in AI for their own sake." It's the impulse to use LangChain because it's popular, or CrewAI because multi-agent systems sound impressive, rather than because they solve your actual problem.

4. Inadequate Infrastructure

Poor data governance and model deployment capabilities doom many projects before they even start. IDC found that almost half of AI project failures are attributed to poor infrastructure and weak deployment strategies.

5. Problems Too Difficult for AI

Some tasks are simply beyond current AI capabilities. Knowing when to walk away is as important as knowing how to build.

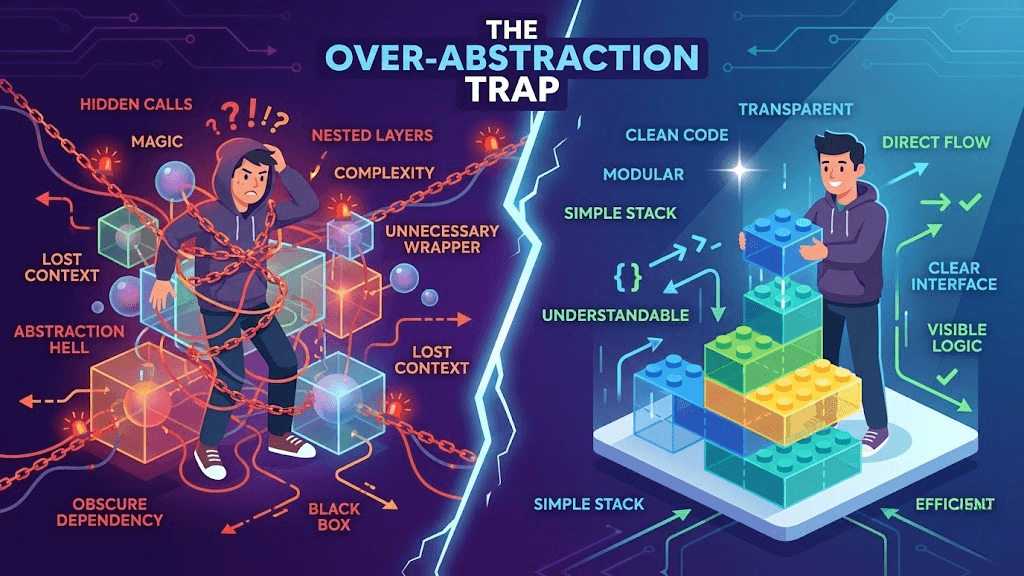

The Problem with Over-Abstraction

Here's where things get personal. The AI development community is flooded with frameworks that promise to make everything easy. LangChain, CrewAI, AutoGen, PydanticAI... they all sound great in demos. In production? That's a different story.

Let me be clear: the problem isn't frameworks themselves. Every production system uses libraries and frameworks. The problem is frameworks that add so many layers of abstraction that you lose control over what's actually happening. The distinction matters:

Over-abstracted frameworks hide complexity behind "magic," make hidden API calls, and force you into rigid patterns.

Transparent toolkits give you building blocks while staying out of your way, letting you see and control everything.

The former fight you. The latter help you. Here's what I mean.

LangChain

LangChain quickly became a go-to for many developers, and its modularity is often praised. But this modularity can devolve into a configuration nightmare. One of the biggest pain points is the lack of control over autonomous agents. The framework often makes hidden calls to LLMs, chaining requests in ways that aren't transparent.

I once had a simple document analysis task balloon into hundreds of dollars in API costs because LangChain was making multiple hidden LLM calls I couldn't see or control. The framework's layers of abstraction made it nearly impossible to understand what was happening under the hood.

Real companies are waking up to this. Octomind used LangChain for a year to power AI agents for software testing. They found its abstractions too inflexible as they scaled, especially for complex architectures like agents spawning sub-agents. After removing LangChain entirely in 2024, they reported: "Once we removed it... we could just code." No longer being constrained by the framework made their team far more productive.

CrewAI

CrewAI offers a more structured approach to multi-agent systems, focusing on role-playing agents that collaborate on tasks. This structure can also be its weakness. The framework can be rigid, making it difficult to dynamically adjust roles or delegate tasks mid-workflow. You spend more time fighting the framework's opinions than building your actual application.

Want to slightly modify how agents communicate? Good luck navigating their rigid crew-based programming model.

PydanticAI

When PydanticAI was announced, I had high hopes. Built by the team behind Pydantic, it promised to bring "that FastAPI feeling to GenAI app development." To their credit, they shipped v1 in September 2025 with real API stability commitments after nine months of iteration.

But there's still the vendor lock-in concern. Pydantic raised $12.5M in October 2024, and their revenue strategy is tied to Logfire subscriptions. While they claim Logfire integration is optional, "make the experience of using PydanticAI with Pydantic Logfire as good as it can possibly be" is a stated priority. This creates an uncomfortable dynamic where the "best" experience requires their paid product.

What They All Share

What do these frameworks share? They prioritize impressive demos over reliable systems. They promise AGI-level capabilities when, after 15 years in software development and countless AI implementations, I know we're just not there yet. They focus on buzzwords and marketing rather than the boring but essential work of building maintainable software.

The issue isn't using libraries or toolkits. It's using ones that add complexity without transparency. When a framework makes it harder to debug than writing the code yourself would be, something has gone wrong.

What you want is the opposite: tools that make the simple things simple and the complex things possible, without hiding what's happening. Tools where you can set a breakpoint and actually understand the execution flow.

The Principles That Actually Work

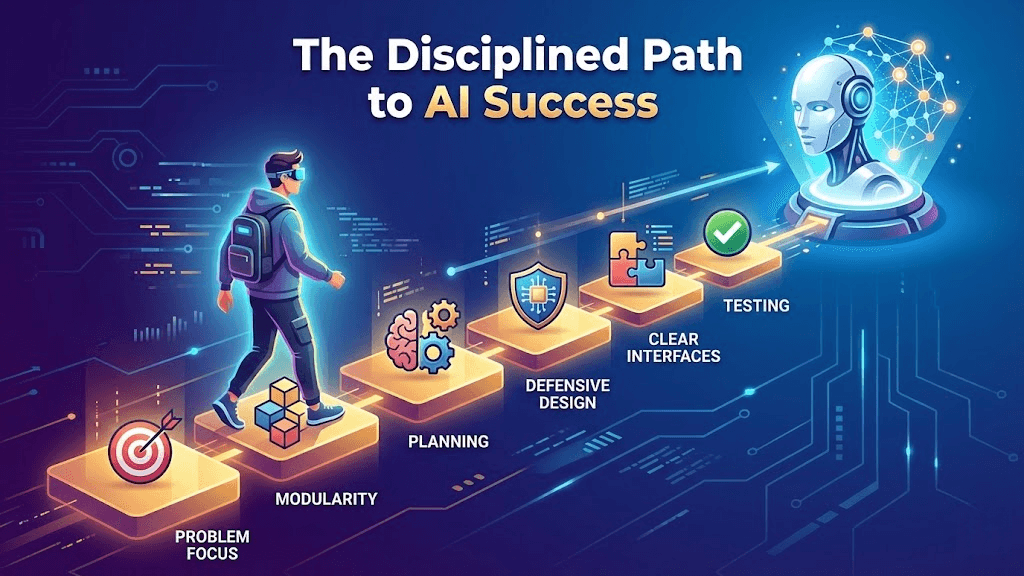

So what does work? After years of building AI systems (and watching them fail and succeed), I've identified six principles that separate the 20% from the 80%. These aren't theoretical: they're the same principles that informed the design of Atomic Agents, a toolkit I created after wrestling with these frustrations.

Atomic Agents is deliberately minimal. It gives you typed building blocks (agents, tools, context providers) without hiding what they do. There's no "magic orchestration" or hidden LLM calls. You write normal Python, you can debug with normal tools, and you always know exactly what's happening. That's the difference between a toolkit that helps you and a framework that fights you.

Principle 1: Embrace Modularity (Think LEGO Blocks)

Design your AI system as a collection of small, interchangeable components rather than one giant all-knowing model. Build with LEGO blocks, not one big block. Each part of your agent (a sub-agent, a tool, a prompt module) should have a single, well-defined purpose.

Why go modular? It's much easier to test, debug, and maintain a set of simple components than a monolithic tangle of logic. If your web-scraping tool throws errors, you can fix or swap out just that tool without rewriting the entire agent. Modularity also makes your agent more extensible: you can add new skills or upgrade components (switch to a better model, a different database) with minimal fuss.

By breaking a complex task into smaller atomic steps, you gain control and predictability. Instead of hoping a single black-box model magically figures everything out, you orchestrate a series of manageable steps. It's the classic software engineering wisdom of separation of concerns: keep each piece of logic separate and your whole system stays sane.

In practice, a modular agent might have distinct components for fetching data, summarizing content, and sending an email, rather than one model prompt trying to do all of those at once. When something goes wrong (and it will), you'll know exactly which piece to tweak, and your whole agent won't crumble.
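To make the fetch, summarize, and email split concrete, here is a minimal sketch in plain Python. Every name in it (`fetch_data`, `summarize`, `build_email`, `run_agent`) is a hypothetical stand-in I made up for illustration, and the "summarizer" is a trivial placeholder where a real model call would go.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Email:
    to: str
    subject: str
    body: str


def fetch_data(source: str) -> str:
    """Hypothetical fetcher; a real one would call an API or a database."""
    return f"Raw report text loaded from {source}. It has several sentences. Most are filler."


def summarize(text: str, max_sentences: int = 1) -> str:
    """Placeholder for a model call: keep only the first few sentences."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    return ". ".join(sentences[:max_sentences]) + "."


def build_email(summary: str, recipient: str) -> Email:
    """Pure formatting step, no model involved."""
    return Email(to=recipient, subject="Daily summary", body=summary)


def run_agent(
    source: str,
    recipient: str,
    fetch: Callable[[str], str] = fetch_data,
    summarizer: Callable[[str], str] = summarize,
) -> Email:
    """Wire the components together; each one can be swapped or tested alone."""
    raw = fetch(source)
    summary = summarizer(raw)
    return build_email(summary, recipient)
```

Because each step is an ordinary function, you can unit test `summarize` on its own, or pass a different `summarizer` into `run_agent` without touching the fetching or email code.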

Principle 2: Implement Persistent Memory

If your agent doesn't remember anything beyond its current query, you're going to have a bad time. Real-world agents need long-term memory. Without persistent memory, an agent is like an amnesiac employee: it will ask the same questions or repeat the same mistakes over and over.

The MIT research highlights this as a key differentiator: "The main cause of the divide is not model quality or regulation, but the absence of learning and memory in deployed systems." Generic tools don't adapt to enterprise workflows. Systems that learn and remember are the ones that succeed.

By giving your AI agent a memory, you enable learning and personalization over time. Technically, this often involves plugging in a vector database or other storage mechanism to act as the agent's "external brain." The agent can embed and save key facts or conversation snippets, and later query them when needed.

Persistent memory turns your agent from a one-off problem-solver into a continuously improving assistant. It can refer back to what happened last week or what the user prefers, making its responses more relevant and avoiding redundant back-and-forth.
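As a rough illustration of the "external brain" idea, the sketch below persists facts in SQLite using only the standard library. It is deliberately simplified: recall uses plain word overlap instead of embeddings, so treat it as a placeholder for a real vector database. The `MemoryStore` class and its methods are hypothetical names, not part of any library.

```python
import sqlite3


class MemoryStore:
    """Tiny persistent memory. Pass a file path to keep facts across runs."""

    def __init__(self, path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS memories ("
            "id INTEGER PRIMARY KEY, user_id TEXT NOT NULL, fact TEXT NOT NULL)"
        )
        self.conn.commit()

    def remember(self, user_id: str, fact: str) -> None:
        """Save one fact for one user."""
        self.conn.execute(
            "INSERT INTO memories (user_id, fact) VALUES (?, ?)", (user_id, fact)
        )
        self.conn.commit()

    def recall(self, user_id: str, query: str, limit: int = 3) -> list[str]:
        """Return this user's facts that share a word with the query, best match first."""
        query_words = set(query.lower().split())
        rows = self.conn.execute(
            "SELECT fact FROM memories WHERE user_id = ? ORDER BY id", (user_id,)
        ).fetchall()
        scored = []
        for (fact,) in rows:
            overlap = len(query_words & set(fact.lower().split()))
            if overlap:
                scored.append((overlap, fact))
        # Stable sort: ties keep insertion order.
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [fact for _, fact in scored[:limit]]
```

In use, the agent would call `remember` after a conversation turn and prepend the results of `recall` to the next prompt. Swapping the word-overlap scoring for embedding similarity changes the body of `recall` but not its interface.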

Principle 3: Plan and Orchestrate the Workflow

Handing an LLM-powered agent a complex goal and saying "go figure it out" is a recipe for chaos. Effective AI agents need a clear game plan. You or a higher-level controller should break big tasks into structured steps and coordinate the agent's actions. Don't rely on "magic autonomy" to handle multi-step operations reliably.

Even human teams have project managers and checklists. Your AI agent is no different. For example, if the agent's job is to research a topic and write a report, orchestrate it as: first call a research tool agent to gather facts, then have a writing agent produce a draft, then a proofreading module to check the draft. Each step feeds into the next in a controlled manner.
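The research, write, proofread sequence can be sketched as an explicit pipeline. The three step functions below are hypothetical placeholders for real agents or model calls; the point is that the order lives in ordinary code you can read, log, and step through in a debugger.

```python
from typing import Callable

Step = Callable[[str], str]


def research(topic: str) -> str:
    """Placeholder for a research agent or search tool."""
    return f"Facts about {topic}: fact A; fact B"


def write_draft(facts: str) -> str:
    """Placeholder for a writing agent."""
    return f"DRAFT\n{facts}"


def proofread(draft: str) -> str:
    """Placeholder for a proofreading module."""
    return draft.replace("DRAFT", "FINAL")


# The plan is data: a fixed, ordered list of named steps.
STEPS: list[tuple[str, Step]] = [
    ("research", research),
    ("write", write_draft),
    ("proofread", proofread),
]


def run_pipeline(task: str, steps: list[tuple[str, Step]]) -> tuple[str, list[str]]:
    """Run steps in order, feeding each output into the next, and record a trace."""
    trace: list[str] = []
    data = task
    for name, step in steps:
        data = step(data)
        if not data.strip():
            # Stop early instead of passing garbage downstream.
            raise ValueError(f"step '{name}' produced empty output")
        trace.append(name)
    return data, trace
```

Calling `run_pipeline("solar power", STEPS)` returns the final text plus the list of steps that ran, which gives you a simple audit trail when something goes wrong.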

We've all seen the flashy autonomous agent demos that spawn dozens of sub-agents and let them talk to each other endlessly. Cool in theory; in practice, they often loop aimlessly or stray off task because nobody is steering. Research on state-of-the-art open-source multi-agent systems found failure rates between 41% and 86.7%. The failures stem not only from individual agent performance but also from the ways agents interact, delegate, and interpret each other's outputs.

Design your agents like a well-choreographed play, not improv theater. You'll get far more consistent and reliable results when each move is planned and nothing is left entirely to chance.

Principle 4: Adopt Defensive Design

A robust agent is built with the expectation that things will go wrong. This means diligently validating outputs and inputs at every step and handling the unexpected gracefully instead of crashing or spewing nonsense.

Some defensive practices I swear by:

Input validation: Never trust incoming data blindly. Check that it's in the format and range you expect. Is that date field really a date? Is that JSON complete?

Assertions and sanity checks: After each agent step, verify the result makes sense. If your agent was supposed to output a JSON object with a "result" field, assert that "result" is present and of the right type. If a summary should be under 100 words, enforce that.

Graceful error handling: Catch exceptions from API calls or model responses and implement fallback strategies. If the web search fails, maybe retry once or use an alternate source. If the LLM's answer is ill-formatted, have a plan to correct it.

Never assume your AI will get everything right on the first try. I've seen agents cheerfully produce invalid outputs (like JSON with missing brackets or extra commentary) as if nothing was wrong. The goal is to make the system robust: if something misfires, the agent should catch it and either recover or fail gracefully with a useful error message.
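Here is one way the practices above might look in code, as a sketch rather than a definitive implementation. It assumes the model is any callable that takes a prompt and returns a string; `parse_result`, `call_with_retries`, and `InvalidOutput` are hypothetical names chosen for this example.

```python
import json
from typing import Callable, Optional


class InvalidOutput(Exception):
    """Raised when a model reply fails validation."""


def parse_result(raw: str, max_words: int = 100) -> str:
    """Sanity-check a reply: valid JSON, a string "result" field, within the word limit."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise InvalidOutput(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict) or not isinstance(data.get("result"), str):
        raise InvalidOutput('missing string field "result"')
    if len(data["result"].split()) > max_words:
        raise InvalidOutput(f"summary exceeds {max_words} words")
    return data["result"]


def call_with_retries(model: Callable[[str], str], prompt: str, attempts: int = 3) -> str:
    """Call the model, validate the reply, and retry with feedback on failure."""
    current_prompt = prompt
    last_error: Optional[InvalidOutput] = None
    for _ in range(attempts):
        try:
            return parse_result(model(current_prompt))
        except InvalidOutput as exc:
            last_error = exc
            # Tell the model what was wrong instead of repeating the same request.
            current_prompt = (
                f"{prompt}\n\nYour previous reply was rejected: {exc}. Reply with JSON only."
            )
    # Fail loudly with a useful message rather than returning bad data.
    raise InvalidOutput(f"gave up after {attempts} attempts: {last_error}")
```

A reply like `Sure! {"result": "ok"` is rejected on the first attempt, and the caller only ever sees either a validated string or a clear error.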

The research backs this up. 77% of businesses express concern about AI hallucinations. 47% of enterprise AI users made at least one major business decision based on hallucinated content in 2024. Trust but verify: every output that moves through your agent pipeline should be treated with a healthy dose of skepticism.

Principle 5: Define Clear Interfaces and Boundaries

Interfaces matter, even for AI. One reason many agent systems break is a lack of clarity in how components talk to each other or to the outside world. Explicitly define how your agent interfaces with tools, humans, and other systems.

If your agent calls external tools (APIs, databases, etc.), define each tool's interface as if you were defining a function in code. What inputs does it expect? What outputs will it return? Make sure the agent is aware of this contract. Use strict schemas for tool inputs and outputs so that the agent's outputs can be automatically validated.
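A tool contract can be as simple as a pair of typed classes. The sketch below uses standard-library dataclasses with a hypothetical weather tool; a schema library such as Pydantic would express the same contract with less hand-written validation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WeatherInput:
    """What the (hypothetical) weather tool expects."""
    city: str
    days: int

    def __post_init__(self) -> None:
        if not isinstance(self.city, str) or not self.city.strip():
            raise ValueError("city must be a non-empty string")
        # bool is a subclass of int, so exclude it explicitly.
        if isinstance(self.days, bool) or not isinstance(self.days, int):
            raise ValueError("days must be an integer")
        if not 1 <= self.days <= 7:
            raise ValueError("days must be between 1 and 7")


@dataclass(frozen=True)
class WeatherOutput:
    """What the tool promises to return."""
    city: str
    forecast: list[str]


def weather_tool(params: WeatherInput) -> WeatherOutput:
    """Placeholder implementation; a real tool would call a weather API."""
    return WeatherOutput(city=params.city, forecast=["sunny"] * params.days)


def call_tool_from_agent(arguments: dict) -> WeatherOutput:
    """Validate the agent's raw arguments against the contract before the tool runs."""
    try:
        params = WeatherInput(**arguments)
    except TypeError as exc:  # missing or unexpected keys
        raise ValueError(f"arguments do not match the tool schema: {exc}") from exc
    return weather_tool(params)
```

If the agent emits `{"city": "Ghent", "days": "2"}`, the call fails at the boundary with a clear message instead of deep inside the tool.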

Clear interfaces also mean consistent formatting. Decide on a format or style guide for responses and stick to it. Don't let the agent one day output a casual "Sure, done!" and the next day a verbose five-paragraph essay, unless that's intentional.

Setting boundaries is equally important. Define what the agent is supposed to do, and by extension what it's not supposed to do. If an incoming request falls outside its scope, a well-designed agent should recognize that and escalate to a human or a different system, rather than attempting something crazy.

Also, use the right tool for the job: if a task can be handled with a straightforward algorithm or database query, don't force your AI agent to do it via prompt. Parsing a date string or doing arithmetic is best done with deterministic code: less error-prone and cheaper. Let the AI focus on what it's best at.
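For instance, date parsing and arithmetic take a few lines of deterministic code. The accepted formats and the `parse_date` and `invoice_total` helpers below are assumptions for illustration; adjust them to whatever your inputs actually look like.

```python
from datetime import date, datetime
from decimal import Decimal

# Assumed input formats for this example; extend as needed.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def parse_date(text: str) -> date:
    """Try each known format in order; fail loudly if none match."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")


def invoice_total(amounts: list[str], tax_rate: str) -> Decimal:
    """Exact decimal arithmetic: sum the amounts, add tax, round to cents."""
    subtotal = sum((Decimal(a) for a in amounts), Decimal("0"))
    return (subtotal * (1 + Decimal(tax_rate))).quantize(Decimal("0.01"))
```

Both functions give the same answer every time, cost nothing per call, and can be exposed to the agent as tools so the model only decides when to use them.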

Principle 6: Align with Reality

Design your AI agent for the real world, not just a glossy demo. This is where many projects fall flat.

First, keep the scope grounded in real needs. It's better to have an agent that does one or two things consistently well than a theoretical do-everything agent that in reality does nothing reliably. Be problem-centric: identify a concrete use case and let that drive your design.

Next, remember that the wild is messy. Users will phrase requests in odd ways. Data might come in noisy. APIs might return errors or slow responses. Design with these in mind. Also, consider performance and cost constraints from day one. If your agent requires 20 calls to GPT-4o for a single task, it might be too slow or expensive to deploy at scale. Look for optimizations: caching results, using cheaper models when precision isn't critical, simplifying the task flow.
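Two of those optimizations, caching and model routing, can be sketched with the standard library alone. The model names and the `call_model` function are hypothetical placeholders for your provider's client, and `CALL_LOG` exists only so the example can show how many real calls were made.

```python
from functools import lru_cache

# Records which model handled each real (non-cached) call.
CALL_LOG: list[str] = []


def call_model(model: str, prompt: str) -> str:
    """Placeholder for a real API call; logs the model name so calls can be counted."""
    CALL_LOG.append(model)
    return f"[{model}] answer to: {prompt}"


def pick_model(needs_precision: bool) -> str:
    """Route to the expensive model only when precision matters."""
    return "large-model" if needs_precision else "small-model"


@lru_cache(maxsize=1024)
def cached_answer(prompt: str, needs_precision: bool = False) -> str:
    """Identical requests are answered from the cache instead of a new call."""
    return call_model(pick_model(needs_precision), prompt)
```

Asking `cached_answer("What is our refund policy?")` twice triggers one model call, not two. An in-process cache like this only pays off when prompts repeat exactly and answers don't go stale quickly, so check both assumptions before relying on it.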

Crucially, test your agent extensively in realistic scenarios. It's not enough that it works on a curated prompt you've tried a few times. Throw real-world edge cases at it. If your agent helps schedule meetings, test it with conflicting appointments, weird calendar formats, or unreasonable user demands.

An AI agent is a means to an end, not the end itself. The most elegantly engineered AI agent means little if it can't function robustly in the environment it was intended for.

The Build vs. Buy Decision

Here's something the MIT research uncovered that surprised many: internal AI builds succeed only one-third as often as vendor partnerships. Buying from specialized vendors has a 67% success rate, while building internally hovers around 22%.

This doesn't mean you should never build. But it does mean you should be honest about your team's capabilities, timeline, and the complexity of what you're trying to achieve. Sometimes the best engineering decision is recognizing when someone else has already solved your problem better than you could.

The research also found that mid-market organizations that empower line managers and use vendor partnerships can move from pilot to full implementation in about 90 days. Large enterprises building internally? Nine months or more.

The HBR Framework: Beyond Code

The Harvard Business Review offers a framework that goes beyond technical implementation. They call it the "5Rs":

Roles: Clarify ownership across the project lifecycle. Who's the business sponsor? Who owns the success metrics? Who monitors after launch?

Responsibilities: Define what success looks like beyond just launching. Include adoption rates, KPI ownership, monitoring plans, and retraining schedules.

Rituals: Establish consistent cadences. Weekly reviews, biweekly executive check-ins, post-launch monitoring. Their research found that establishing rituals cuts delivery times by 50-60%.

Resources: Create reusable templates, frameworks, and accelerators. Don't reinvent the wheel for every project.

Results: Define metrics that pair adoption with business impact before the project starts. Not after.

This matters because, as HBR puts it: "Many AI projects fail because leaders treat adoption as a tech purchase instead of a behavioral change problem."

Summary: The Path to the 20%

If you take one thing from this article, let it be this: AI project success is mostly about discipline, not technology.

The research is clear. RAND found that the most common failure cause is misunderstanding the problem. MIT found that 95% of organizations see no business return from generative AI, not because of model quality, but because of "vague goals, poor data, and organizational inertia." Gartner found that most abandonments happen because of unclear business value.

The 20% who succeed share common traits:

They start with problems, not solutions. They identify a concrete business need before picking any technology.

They build modular, testable systems. Each component has a single responsibility and can be debugged independently.

They plan and orchestrate deliberately. Nothing is left to "AI magic." Every step is intentional.

They design defensively. They assume things will go wrong and build systems that handle it gracefully.

They define clear interfaces. Every component knows exactly what it receives and what it produces.

They test in reality. Not just happy-path demos, but messy real-world conditions.

They're honest about build vs. buy. Sometimes the best solution is acknowledging someone else has solved this better.

They choose transparent tools over magic. If you're spending more time fighting your tooling than solving the problem, something is wrong.

The AI development community doesn't need more magic or AGI promises. It needs solid engineering principles applied to real problems. That's what separates the 20% from the 80%.

Stop chasing impressive abstractions. Start building systems that work.